This will take a while. Bear with me.

I’m obsessive about backing up my data. I don’t want to take the chance of ever losing anything important. But that doesn’t mean I’m a data hoarder. I like to think I’m pragmatic about it. And I don’t trust anyone else to do it for me.

From around 2006 to 2012, I kept a Mac mini attached to our TV with a Drobo hanging off the back. It had all our downloaded movies on it. And every night it would automatically download the latest releases of our favorite TV shows from Usenet so my wife and I could watch them with Plex the next day. It worked great, and all the media files were stored redundantly across multiple hard drives with tons of storage space. (Would it survive a house fire? No. But files like that weren’t critical.) But with the rise of streaming services and useful pay-to-watch stores like iTunes, now I’d rather just pay someone else to handle all of that for me. So, I don’t keep any media files like that locally any longer.

But my email? My financial and business documents? My family’s photo and home video archive? I’m really obsessive about that.

For most of my computing life, all of that data was small enough to fit on my laptop or desktop’s hard drive. In college, I remember burning a CD (not a DVD) every few months with all of my school work, source code, and photos on it for safekeeping. The internet wasn’t yet fast enough to make backing up to a cloud (were clouds even a thing back then?) feasible, so as my data grew I just cloned everything nightly to a spare drive using SuperDuper and Time Machine. It worked for the most part. Sure, I still worried about my house catching fire and destroying my backups, but there really wasn’t an alternative other than occasionally taking one of the backup drives to work or a friend’s house.

But then the internet got fast, really fast, and syncing everything to the cloud became easy and affordable. I was a beta user of Gmail back in 2004. I became an early paid subscriber of Dropbox around 2008. All of my data was stored in their services and fully available on every computer and – eventually – mobile device. At the time, I thought I had reached peak-backup.

I was wrong.

Now we have too much data. My email is around 20GB. My family’s photo library is approaching 500GB. That’s more data than will fit on my laptop’s puny SSD. It will fit on my iMac, but it leaves precious little available space for anything else. I could connect external drives, but that gets messy and further complicates my local backup routine. (Yes, Backblaze is a good potential solution to that.)

Another problem is that most of our data now is either created directly in the cloud (email, Google Docs, etc) or is immediately sent to it (iPhone photos uploaded to iCloud and/or Google Photos), bypassing my local storage. If you trust Google (or Apple) to keep your data safe and backed up, that’s great. I don’t. I’ve heard too many horror stories about one of Google’s automated AI systems flagging an account and locking out the user. And with no way to contact an actual human, you’re dead in the water along with all your data. Especially if you lose access to your primary email account, which is the key to all your other online accounts.

So, I need a way to back up my newly created cloud data, too. This is getting complicated.

First step. My email. This is easy. Five years ago I set up new email addresses for my personal and business accounts with Fastmail. They’re amazing. I imported my 10+ years’ worth of email from Google (sadly, my pre-2004 college email and personal accounts are lost to the ether), set up a forwarding rule in Gmail, and with the help of 1Password, changed all of my online services to use my new email. It took about a month to switch everything over, but now the only email coming to my old Gmail address is spam. Fastmail keeps redundant backups of my email. And I have full IMAP copies available on multiple computers in case they don’t. And if something ever goes wrong, unlike Google where their advertisers are the customer – and I’m the product – I pay Fastmail every month and can call up a live human to talk to.

Source code. I’m a paying GitHub customer. Everything’s stored and backed up there. But still, what if they screw up? I ran a small, self-hosted server with GitLab on it for a while instead of GitHub and set it to back up all my code nightly to S3. That worked great. But, I like GitHub’s UI and feature set better. Plus, it’s one less server I have to manage. So, where do I mirror my code to? (Much of my code is checked out locally on my computer, but not all of it.)

Back in 2006, my boss at the web agency I was working at told me about rsync.net. They provide you with a non-interactive Unix shell account that you can pipe data to over SFTP, rsync, or any other standard Unix tool. You pay by the GB/month, and they scale to petabyte sizes for customers who need that. So, I signed up and used them to back up all of my svn (remember svn?) repos. With the rise of git and the switch to GitHub, I cancelled my account and mostly forgot about them.

But, aha!, I now have new data storage problems. Rsync.net could be a great solution again. So, I re-signed up and set up my primary web server to mirror all of my GitHub repos over to them each night. Here’s the script I’m using…
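For illustration only, here is a generic sketch of how a nightly mirror like that can be structured. It is not the actual script mentioned above: the function names, the repo list file, and the rsync destination in the comments are all made-up placeholders.

```shell
# Hypothetical sketch: keep bare mirrors of a list of git repos.
# mirror_repo, mirror_all and the list file are illustrative names.

mirror_repo() {
    # $1 = clone URL, $2 = directory that holds the mirrors
    repo_url=$1
    mirror_dir=$2
    repo_name=$(basename "$repo_url" .git)
    if [ -d "$mirror_dir/$repo_name.git" ]; then
        # Existing mirror: fetch every ref and prune deleted ones
        git -C "$mirror_dir/$repo_name.git" remote update --prune < /dev/null
    else
        git clone --mirror "$repo_url" "$mirror_dir/$repo_name.git" < /dev/null
    fi
}

mirror_all() {
    # $1 = file with one clone URL per line, $2 = mirror directory
    mkdir -p "$2" || return 1
    while IFS= read -r line; do
        if [ -n "$line" ]; then
            mirror_repo "$line" "$2" || echo "failed: $line" >&2
        fi
    done < "$1"
}

# Assumed nightly usage (paths and host are placeholders):
#   mirror_all "$HOME/repos.txt" "$HOME/git-mirrors"
#   rsync -az --delete "$HOME/git-mirrors/" user@backup.example.com:git-mirrors/
```

The git commands read from /dev/null so they can't swallow the rest of the repo list when they run inside the read loop. A mirror clone carries every branch and tag, so the rsync step at the end only has to copy plain directories.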

Next up, important documents. Traditionally, I’ve kept everything that would normally go in my Mac’s “Documents” folder in my Dropbox account. That worked great for a long time. But once I started paying Google for extra storage space for Google Photos (more on that later), it felt silly to keep paying Dropbox as well. So, after 10+ years as a paid subscriber, I downgraded to a free account and moved everything into Google Drive. Sure, it’s not as nice as Dropbox, but it works and saves me $10 a month.

Like I said above, I mostly trust Google, but not entirely. So, let’s sync my Google Drive’s contents to rsync.net, too. Edit your Mac’s crontab to add this line…

30 * * * * /usr/bin/rsync -avz /Users/thall/Google\ Drive/ [email protected]:google-drive

Also, I keep all of the really important paperwork that would normally be in a fire safe in my garage in a DEVONthink library so I can search the contents of my PDFs. It’s synced automatically with iCloud and available across my mobile devices. But still, better back that up, too.

45 * * * * /usr/bin/rsync -avz /Users/thall/FireSafe.dtBase2 [email protected]:
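One thing to watch with hourly jobs like these: if a sync ever takes longer than an hour, cron will happily start a second copy on top of the first. A minimal sketch of a guard, assuming a hypothetical run_locked wrapper and lock path (neither is part of my actual setup). It uses mkdir because creating a directory is atomic and macOS doesn't ship a flock command.

```shell
# Hypothetical wrapper: run a command only if no previous run holds the lock.
# mkdir is atomic, so only one caller can create the lock directory.
run_locked() {
    lock_dir=$1
    shift
    if ! mkdir "$lock_dir" 2>/dev/null; then
        echo "previous run still active, skipping" >&2
        return 0
    fi
    "$@"
    status=$?
    rmdir "$lock_dir"
    return "$status"
}

# Assumed usage from a small script called by cron (host is a placeholder):
#   run_locked /tmp/google-drive.lock \
#       /usr/bin/rsync -avz "/Users/thall/Google Drive/" user@backup.example.com:google-drive
```

The trade-off is that a job killed mid-run leaves the lock directory behind, and it has to be removed by hand before the next sync will start.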

So, that’s all of my data except for the big one – my family’s photo and home video archives.

For a long time I kept all my family’s archives in Dropbox. I even made an iOS app dedicated to browsing your library. I could have stuck everything in Apple’s Photos.app where it’s available on my devices via iCloud, but that’s tied to my Apple ID. My wife wouldn’t be able to see those photos. Plus, any photos she took on her phone would get stored in her iCloud account and not synced with the main family archive. So, we used the Dropbox app, signed in to my account, to back up our phones’ photos.

But, like I said earlier, our photo and video library became too big to comfortably fit in Dropbox. Plus, Google Photos had just been released and it was amazing. Do I like the thought of Google’s AI robots churning through my photos and possibly using that data to sell me advertisements? No. But, their machine-learning expertise and big-data solutions make it really hard to resist. So, I spent a week and moved everything out of Dropbox into Google Photos.

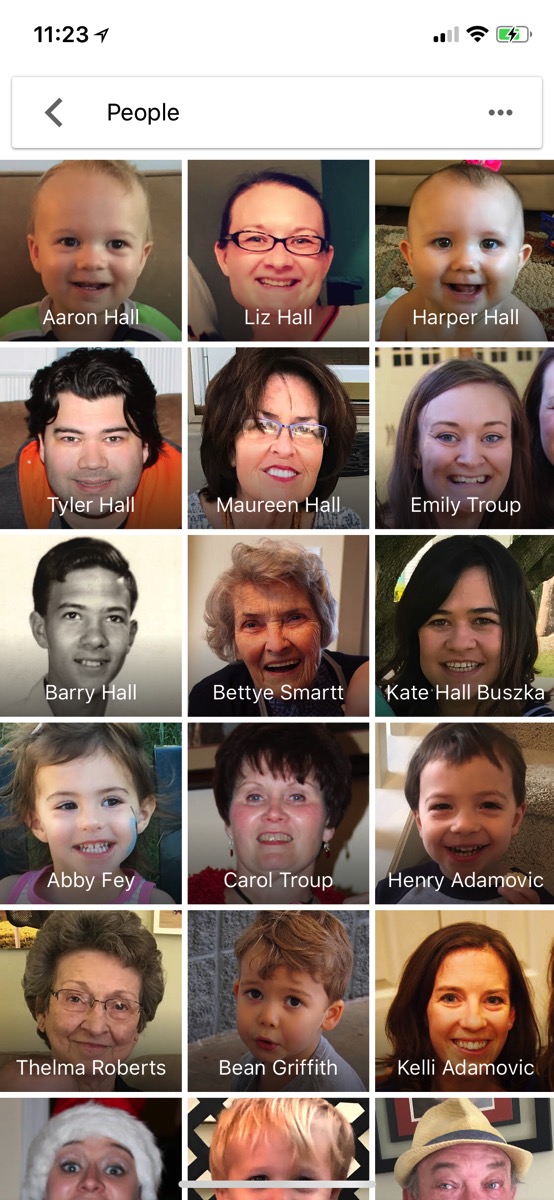

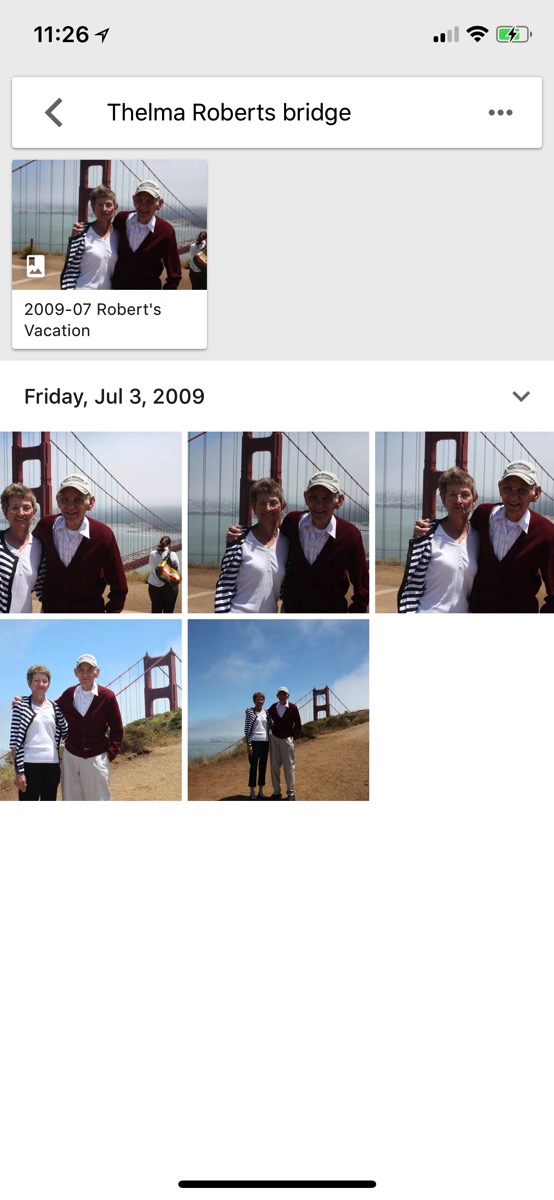

Now everything is sorted into albums, by date, and searchable on any device. I can literally type into their search box “all photos of my wife’s grandmother taken in front of the Golden Gate bridge” and Google returns exactly what I’m looking for. It’s wonderful.

My wife’s phone has the Google Photos app installed with my account on it so every photo she takes gets stored in a shared account we can both access and view on all our devices.

But what’s the recurring theme of this blog post? That’s right. I don’t fully trust any cloud provider to be the only source of my data. Someone clever said “the cloud is just someone else’s computer.” That’s exactly correct. If your data isn’t in at least two different places, it’s not really backed up.

But how do I back up my 500GB+ of photos that are already in Google’s cloud? And then how do I keep newly added items synced as well?

As usual, I tried to find a way to make it work with rsync.net. I found a great open-source project called rclone. It’s a command line tool that shuffles your files between cloud providers or any SFTP server with lots of configurable options and granularity.

First off, even if rclone does do what I need, I can’t just run it on my Mac. My internet is too slow for the initial backup. I need to use it on one of my servers so I have a fast data center to data center connection between Google and rsync.net.

Getting it set up on one of my Ubuntu servers at Linode was a simple bash one-liner. Configuring it to then work with my Google and rsync.net accounts was just a matter of running their easy-to-use configuration wizard.

Note: rclone doesn’t support a connection to Google Photos. Instead, you need to log in to Google Drive on the web and enable the “Automatically put your Google Photos into a folder in My Drive” option in Settings. (And also tell your Google Backup & Sync Mac app not to sync that folder locally – unless you have the space available – I don’t.) Then, rclone can access your Google Photos data via a special folder in your Drive account.

With everything configured, I ran a few connection tests and it all worked as expected. So, I naively ran this command thinking it would sync everything if I let it run long enough:

rclone copy -P "GoogleDrive:Google Photos" rsync:GooglePhotos

Things started out fine. But due to Google API rate limits, the transfer was soon throttled to 300KB/sec. That would have taken WEEKS to transfer my data (about 19 days for 500GB at that rate). And, the connection entirely stalled out after about an hour. I even configured rclone to use my own, private Google OAuth keys, but with the same result. So, I needed a better way to do the initial import.

Google offers their Takeout service. It lets you download an archive of ALL your data from any of their services. I requested an archive of my Google Photos account and eight hours later they emailed me to let me know it was ready. Click the email link to their website, boom. Ten 50GB .tgz files. Now what to do with them?

I can’t download them to my Mac and re-upload them – that’s too slow. Instead, I’ll just grab the download URLs and use curl on my server to get them, extract them, and sync them over.

I don’t have enough room on my primary web server – plus I don’t want to saturate my traffic for any customers visiting my website. So, spin up a new Linode, attach a 500GB network volume, and we’re in business. Right? Nope.

The download links are protected behind my Google account (that’s great!) so I need a web browser to authenticate. Back on my Mac, fire up Charles Proxy and begin the downloads in Safari. Once they start, cancel them. Go to Charles, find the final GET connection, and right-click to copy the request as a curl command including all of the authentication headers and cookies. Paste that command into my server’s Terminal window and watch my 500GB archive download at 150MB(!!)/sec.

(Turns out, extracting all of those huge .tgz files took longer than actually downloading them.)
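The extract step can be sketched as a simple loop, assuming all of the Takeout archives sit in one directory. The extract_all name and the paths in the usage comment are illustrative, not what I actually typed.

```shell
# Hypothetical helper: unpack every .tgz in src_dir into dest_dir.
extract_all() {
    src_dir=$1
    dest_dir=$2
    mkdir -p "$dest_dir" || return 1
    for archive in "$src_dir"/*.tgz; do
        # Skip the literal pattern when no archives match
        [ -e "$archive" ] || continue
        # Stop at the first archive that fails to extract
        tar -xzf "$archive" -C "$dest_dir" || return 1
    done
}

# Assumed usage (placeholder paths on the attached volume):
#   extract_all /mnt/takeout/archives /mnt/takeout/extracted
```

Extracting one archive at a time keeps things easy to resume: if a file turns out to be truncated, the loop stops there and the earlier archives are already unpacked.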

Finally, rsync everything over to my backup server.

And that’s where I currently am right now. Waiting on 500GB worth of photos and videos to stream across the internet from Linode in Atlanta to rsync.net in Denver. It looks like I have about six more hours to go. Once that’s done, the initial seed of my Google Photos backup will be complete. Next, I need a way to back up anything that gets added in the future.

Between the two of us, my wife and I take about 5 to 10 photos a day. Mostly of our kids. Holidays and special events may produce a bunch more at once, but that’s sporadic. All I need to do is sync the last 24 hours worth of new data once every night.

rclone is the perfect tool for this job. It supports a “--max-age=24h” option that will only grab the latest items, so it will comfortably fit within Google’s API rate limits. Once again, set up a cron job on my server like so:

0 0 * * * rclone copy --max-age=24h "GoogleDrive:Google Photos" rsync:GooglePhotos
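One caveat with an exact 24-hour window: if a nightly run fails or the server is down, that day’s photos never get picked up. Since rclone copy skips files that are already identical at the destination, a wider, overlapping window costs very little. A sketch of that idea, where the wrapper name, the RCLONE override, and the 72h default are all assumptions of mine rather than anything rclone requires:

```shell
# Hypothetical nightly wrapper around the rclone command above.
# RCLONE can be overridden (for example with "echo" as a dry run);
# the 72h default window is an arbitrary, assumed value.
sync_recent_photos() {
    window=${1:-72h}
    "${RCLONE:-rclone}" copy --max-age="$window" \
        "GoogleDrive:Google Photos" rsync:GooglePhotos
}

# Assumed usage:
#   sync_recent_photos        # last 72 hours
#   sync_recent_photos 7d     # catch up after a longer outage
```

With a three-day window, two missed nights in a row still get picked up by the next successful run.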

And, that’s it. I think I’m done. Really, this time.

All of my important data – backed up to multiple storage providers – and available on all of my and my family’s devices. At least until the whole situation changes yet again.

A few more notes:

All of my web server configuration files are stored in git. As are all of my websites’ actual files. But, I still run an hourly cron job to back up all of “/var/www” and “/etc/apache2/sites-available” to rsync.net since it’s actually such a small amount of data. This lets me run one command to re-sync everything in the event I need to move to a new server, without having to clone a ton of individual git repos. (I know I need to learn a better devops technique with reproducible deployments like Ansible, Puppet, or whatever the cool tech is these days. But everything I do is just a standard LAMP stack (no containers, only one or two actual servers), so spinning up a new machine is really just a click in the Linode control panel, a couple of apt-get commands, and dropping my PHP files into a directory.)

My databases are mysqldump’d every hour, versioned, and archived in S3.
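A rough sketch of what an hourly dump like that can look like. The assumptions here are loud ones: the database and bucket names are placeholders, “versioned” is interpreted as timestamped file names, and the upload uses the AWS CLI, which may not match the real setup.

```shell
# Hypothetical hourly dump: timestamped file name, gzip, upload to S3.
dump_name() {
    # $1 = database name, $2 = timestamp such as 20240131T0100Z
    printf '%s-%s.sql\n' "$1" "$2"
}

backup_database() {
    # $1 = database name, $2 = S3 bucket (both placeholders)
    db=$1
    bucket=$2
    stamp=$(date -u +%Y%m%dT%H%MZ)
    file="/tmp/$(dump_name "$db" "$stamp")"
    # --single-transaction gives a consistent snapshot of InnoDB tables
    mysqldump --single-transaction "$db" > "$file" || return 1
    gzip -f "$file" || return 1
    # Remove the local copy only after the upload succeeds
    aws s3 cp "$file.gz" "s3://$bucket/mysql/$db/" && rm -f "$file.gz"
}

# Assumed usage from cron, once an hour:
#   backup_database blog my-backup-bucket
```

Dumping to a file first, rather than piping straight into gzip, means a failed mysqldump stops the job instead of uploading a truncated archive.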

All of the source code on my Mac is checked out into a single parent directory in my home folder. It gets rsync’d offsite every hour, just in case. Think of it as a poor man’s Time Machine in case git fails me.

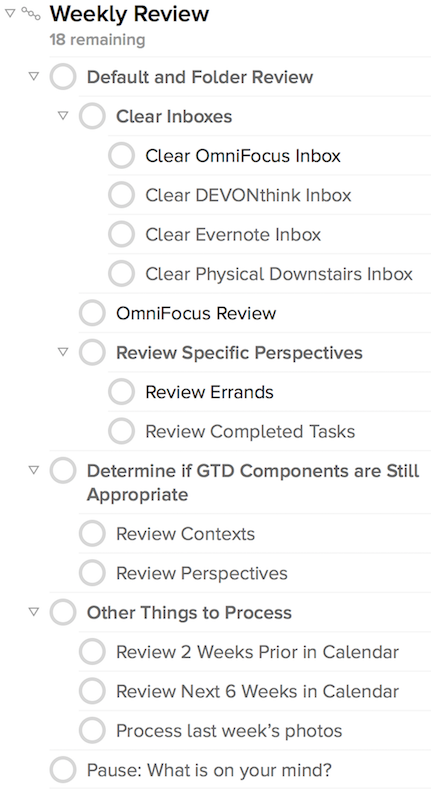

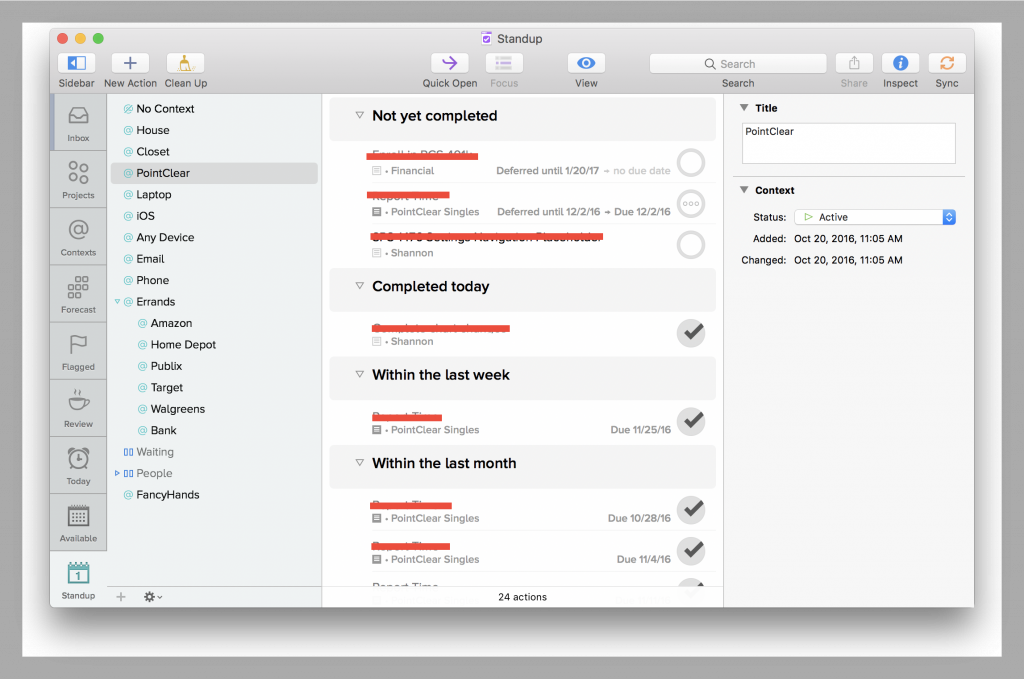

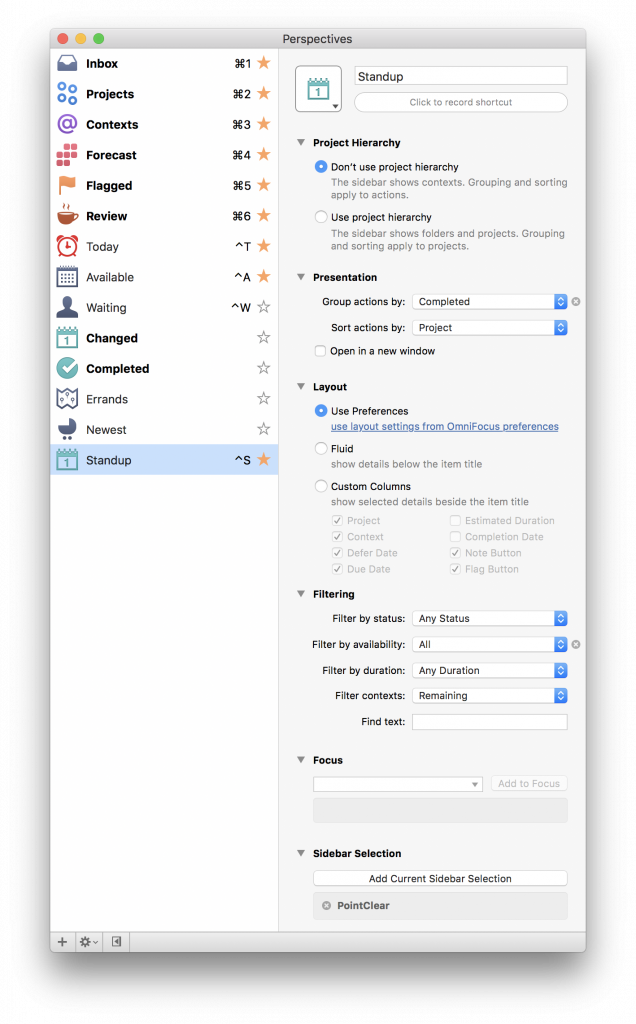

I do a lot of work in The Omni Group‘s apps – OmniFocus, OmniOutliner, and OmniGraffle. All of those documents are stored in their free WebDAV sync service and mirrored on my Mac and mobile devices.

All of my music purchases have gone through iTunes since that store debuted however many years ago. I can always re-download my purchases (probably?). Non-iTunes music ripped from CDs long ago, and my huge collection of live music, is stored in iTunes Match for a yearly fee. A few years ago when I made the switch to streaming music services and mostly stopped buying new albums, I archived all of my mp3s in Amazon S3 as a backup. I need to set a reminder to upload any new music I’ve acquired as a recurring task once a year or so.

Also, I have Backblaze running on my desktop and laptop doing its thing. So yeah. I guess that’s yet another layer of redundancy.