Clever solution from Daniel Jalkut of Red Sweater Software to speed up reading referral links pointing to your website. His script highlights the relevant sections so you can quickly scan down to what was said about you.

Using Amazon S3 as a Content Delivery Network

[Update: You might also be interested in s3up for storing static content in Amazon S3.]

Earlier this week I posted about my experience redesigning this site, focusing on optimizing my page load times using YSlow. A large part of that process involved storing static content (images, stylesheets, JavaScript) on Amazon S3 and using it like a poor man’s content delivery network (CDN). I made some hand-waving references to a deploy script I wrote which handles syncing content to S3 and also adding expiry headers and gzipping that data. A couple users emailed asking for more info, so, here goes.

Why Amazon S3?

Since its launch, nearly every technical blogger on the net has weighed in on why Amazon S3 is (for lack of a better word) awesome. No need for me to repeat them. I’ll just say quickly that it’s cheap (as in price, not in quality), fast, and easy to use. If you’ve got the deep pockets of a corporation backing you, you could probably find a better deal with another CDN provider, but for bloggers, startups, and small businesses, it’s the best game in town.

Amazon S3 is platform and language agnostic. It’s a massive harddrive in the sky with an open API sitting on top. You can connect to it from any system using just about any programming language. For this tutorial, I’ll be using a slightly modified version of the S3 library I wrote in PHP. I say “slightly modified” because I had to make a few changes to enable setting the expires and gzip headers. These changes will eventually make their way into the official project — I just haven’t done it yet.

The Deploy Process

YSlow recommends hosting static content such as images, stylesheets, and JavaScript files on a CDN to speed up page load times. It’s also best to give each file a far future expiration header (so the browser doesn’t try to reload the asset on each page view) and to gzip it. On a typical webserver like Apache, these are simple changes that you can do programatically through a config file. But Amazon S3 isn’t really a web server. It’s just a “dumb” storage device that happens to be accessible over the web. We’ll need to add the headers ourselves, manually, upfront when we upload.

The deploy script will also need to be smart and not re-upload files that are already on S3 and haven’t changed. To accomplish this we’ll be comparing the file on disk with the ETag value (md5 hash) on S3. Let’s get started.

Images

Deploying images is straight forward.

- Loop over every image in our

/images/directory. - Calculate the file’s md5 hash and compare to the one in S3.

- If the file doesn’t exist or has changed, upload it using a far futures header.

- Repeat for the next image.

Source:

<?PHP

$files = scandir(DOC_ROOT . IMG_PATH);

foreach($files as $fn)

{

if(!in_array(substr($fn, -3), array('jpg', 'png', 'gif'))) continue;

$object = IMG_PATH . $fn;

$the_file = DOC_ROOT . IMG_PATH . $fn;

// Only upload if the file is different

if(!$s3->objectIsSame($bucket, $object, md5_file($the_file)))

{

echo "Putting $the_file . . . ";

if($s3->putObject($bucket, $object, $the_file, true, null, array('Expires' => $expires)))

echo "OK\n";

else

echo "ERROR!\n";

}

else

echo "Skipping $the_file\n";

}

JavaScript and Stylesheets

The same process applies to JavaScript and stylesheets. The only difference is we need to serve gzip encoded versions to browsers that support it. As I said above, S3 won’t do this natively so we need to fake it by uploading a plaintext and a gzipped version of each file and then use PHP to serve the appropriate one to the user.

In the master config file on my website, I set a variable called $gz like so:

<?PHP

$gz = strpos($_SERVER['HTTP_ACCEPT_ENCODING'], 'gzip') !== false ? 'gz.' : '';

That snippet detects if the user’s browser supports gzip encoding and sets the variable appropriately. Then, throughout the site, I link to all of my JavaScript and CSS files like this:

<link rel="stylesheet" href="https://clickontyler-clickonideas.netdna-ssl.com/css/main.<?PHP echo $gz;?>css" type="text/css">

That way, if the $gz variable is set, it adds a “gz.” to the filename. Otherwise, the filename doesn’t change. It’s a quick way to transparently give the right file to the browser.

With that out of the way, here’s how I deploy the gzipped content:

<?PHP

// List your stylesheets here for concatenation...

$css = file_get_contents(DOC_ROOT . CSS_PATH . 'reset-fonts-grids.css') . "\n\n";

$css .= file_get_contents(DOC_ROOT . CSS_PATH . 'screen.css') . "\n\n";

$css .= file_get_contents(DOC_ROOT . CSS_PATH . 'jquery.lightbox.css') . "\n\n";

$css .= file_get_contents(DOC_ROOT . CSS_PATH . 'syntax.css') . "\n\n";

file_put_contents(DOC_ROOT . CSS_PATH . 'combined.css', $css);

shell_exec('gzip -c ' . DOC_ROOT . CSS_PATH . 'combined.css > ' . DOC_ROOT . CSS_PATH . 'combined.gz.css');

if(!$s3->objectIsSame($bucket, CSS_PATH . 'combined.css', md5_file(DOC_ROOT . CSS_PATH . 'combined.css')))

{

echo "Putting combined.css...";

if($s3->putObject($bucket, CSS_PATH . 'combined.css', DOC_ROOT . CSS_PATH . 'combined.css', true, null, array('Expires' => $expires)))

echo "OK\n";

else

echo "ERROR!\n";

echo "Putting combined.gz.css...";

if($s3->putObject($bucket, CSS_PATH . 'combined.gz.css', DOC_ROOT . CSS_PATH . '/combined.gz.css', true, null, array('Expires' => $expires, 'Content-Encoding' => 'gzip')))

echo "OK\n";

else

echo "ERROR!\n";

}

else

echo "Skipping combined.css\n";

You’ll notice that the first thing I do is concatenate all of my files into a single file — that’s another YSlow recommendation to speed things up. From there, we compress using gzip and then up the two versions. Looking at this code, there’s probably a native PHP extension to handle the gzipping instead of exec’ing a shell command, but I haven’t looked into it (yet).

Also, make sure and notice that I’m adding a Content-Encoding: gzip header to each file. If you don’t do this, the browser will crap out on you when it tries to read the file as plaintext.

And We’re Done

So those are the main bits of the script. You can download the full script (and the S3 library) from my Google Code project.

Building a Better Website With Yahoo!

It’s been a long time coming, but I finally pushed out a new design for this website last month. I rebuilt it from the ground up using two key tools from the Yahoo! Developer Network:

The new design is really a refresh of the previous look with a focus on readability and speed. I want to take a few minutes and touch on what I learned during this go-round so (hopefully) others might benefit.

Color Scheme

Although I really liked the darker color scheme from before, it was too hard to read. There simply wasn’t enough contrast between the body text and the black background. I tried my best to make it work — I searched around for various articles about text legibility on dark backgrounds. I increased the letter spacing, the leading, narrowed the body columns, and everything else I learned in the intro graphic design class I took in college. The results were better, but my gut agreed with all the articles I read online which basically said “don’t do it.” So I compromised and switched to a white body background, while leaving the header mostly untouched. I find the new look much more readable — hopefully this will encourage me to begin writing longer posts.

CSS and Semantic Structure

The old site was built piecemeal over a couple months and, quite frankly, turned into a mess font-wise. I had inconsistent headers, font-weights, and anchor styles depending on which section you were viewing. With the new design, I sat down (as I should have before) and decided explicitly on which font family, size, and color to use for each header. I specced out the font sizes using YUI’s percent-based scheme which helps ensure a consistent look when users adjust the size. (Go ahead, scale the font size up and down.) An added bonus was that it forced me to think more about the semantic structure of my markup. (If you have Firefox’s Web Developer toolbar installed, try viewing the site with stylesheets turned off.) If there’s one thing I learned working for Sitening, it’s that semantic structure plays a huge part in your SERPs.

Optimizing With YSlow

At OSCON last summer, I attended one of the first talks Steve Souders gave on YSlow — a Firefox plugin that measures website performance. That, plus working for Yahoo!, has kept the techniques suggested by YSlow in the back of my head with every site I build. But this redesign was the first time I committed to scoring as high as I could.

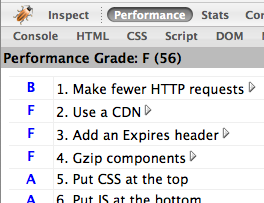

As usual, I coded everything by hand, paying attention to all the typical SEO rules that I learned at Sitening. Once the initial design was complete and I had a working home page, I ran YSlow.

Ouch. A failing 56 out of 100. What did YSlow suggest I improve? And how did I fix it?

- Make fewer HTTP requests – My site was including too many files. Three CSS stylesheets, four JavaScript files, plus any images on the page. I can’t cut down on the amount of images (without resorting to using sprites – which are usually more trouble than they’re worth), so I concatenated my CSS and JS into single files. That removed five requests and brought me up to an “A” ranking for that category. (I’m further toying with adding the YUI Compressor into the mix.)

- Use a content delivery network – At Yahoo! we put all static files on Akamai. Other large websites like Facebook, Google, and MySpace push to their own CDNs, too. But what’s a single developer to do? Use Amazon S3 of course! I put together a quick PHP script which syncs all of my static content (images, css, js) and stores them on S3. Throughout the site, I prepend each link with a PHP variable that lets me switch the CDN on or off depending on if I’m running locally or on my production server. (And, in the event S3 ever goes down or away, I can quick switch back to serving files off my own domain.)

- Add expiry headers – Expiry headers tell the browser to cache static content and not attempt to reload it on each page view. I didn’t want to put a far future header on my PHP files (since they change often), but I did add them to all of the content stored on S3. This is fine for images that should never change, but for my JavaScript and CSS files it means I need to change their filename whenever I push out a new update so the browser knows to re-download the content. It’s extra work on my part, but it pays off later on.

- Gzip files – This fix comes in two parts. First, I modified Apache to serve gzipped content if the browser supports it (most do) — not only does this cut down on transfer time, but it also decreases the amount of bandwidth I’m serving. But what about content coming from S3? Amazon doesn’t support gzipping content natively. Instead, in addition to the static files stored there, I also uploaded their gzipped counterparts. Then, using PHP, I change the HTML links to reference the gzip versions if I detect the user’s browser can handle it.

- Configure ETags – ETags are a hash provided by the webserver that the browser can use to determine if a file has been modified before downloading it. Amazon S3 automatically generates ETags for every file — it’s just a free benefit of using S3 as my CDN.

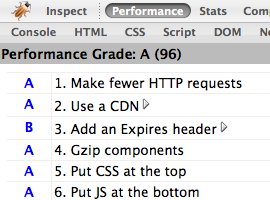

So, all of the changes above took about three hours to implement. Most of that time was spent writing my S3 deploy script and figuring out how to make Amazon serve gzipped content. Was it worth it? See for yourself.

Wow. Three short hours of work and I jumped to a near perfect 96 out of 100. The only remaining penalty is from not serving an expires header on my Mint JavaScript.

Do these optimization techniques make a difference? I think so. Visually, I can tell there’s a huge increase in page rendering time on both Firefox and Safari. (IE accounts for 6% of my traffic, so I don’t bother testing there any longer.) More amazing, perhaps, is the site’s performance on iPhone. The page doesn’t just load — it appears.

I’ve made a bunch of vague references to the S3 deploy script I’m using and how to setup gzip on Amazon. In the interest of space, I’ve left out the specifics. If you’re interested, email me with any questions and I’ll be happy to help.

Dark City Director’s Cut

One of the best movies ever made just got even better.

The original 1998 release ran 96 minutes and the new director’s cut is about 15 minutes longer, clocking in at 111 minutes. The new cut supposedly has improved special effects and a new and improved sound mix . . . the new release also features two additional commentary tracks. —via /Film

I can’t recommend Dark City highly enough. If you’ve never seen it, go rent the DVD. After you watch it, start over and see it again with Roger Ebert’s commentary turned on.

Mercury Mover

MercuryMover is a System Preference pane that lets you move and resize windows using only the keyboard. Awesome. Add that to an already lethal TextMate + Quicksilver combination, and I’m inching closer to my dream of unplugging my mouse forever. —via MacOSXHints

Holy Crap! Mint Plugin

Here’s a quick Pepper plugin called Holy Crap! I wrote for Shaun Inman’s Mint software. The idea is simple: it sends you an email alert whenever it detects your site has become popular on del.icio.us, Digg, etc. Think of it as an early warning system.

It’s easy to add your own warnings to the Pepper. Out of the box it looks for traffic coming from the popular site lists on

- del.icio.us

- Digg

- Slashdot

- and (why not?) Daring Fireball

Why the name Holy Crap!? Because that’s generally the first thing you say when your website hits the front page of Slashdot.

I just finished writing it earlier this afternoon, so I haven’t given it a super-thorough testing just yet. But it appears to be working ok so far. Still, send in any bugs you find.

Bank of America RSS Feeds

Bank of America has a great online banking system. It’s why I switched to them three years ago. I’ve often wanted them to provide an RSS feed of recent transactions on my account — I’ve emailed them multiple times, but no such luck. So, today I finally got around to doing what I always do — I wrote a script to scrape their website and return the data in the format I want.

Honestly, it’s one of the more complex scraping scripts I’ve written. Their sign-on process involves login tokens, variable URLs, and three challenge questions in addition to entering your passcode. In the end I think it was worth the time. Seeing my cleared and pending transactions in NetNewsWire is awesome.

Before I give out the link to the script, I want to take a moment and emphasize that this could be a potentially huge security risk. This script requires you store your login credentials and the answer to all three of your security questions in plain text. I recommend only running it locally on your own computer. Store it on a public web server at your own risk! Definitely don’t store it on a shared host!!

You can download the script here.

Keep an eye out on this blog — I’ll post updates if/when Bank of America modifies their site and my scraping code breaks. Feel free to email me with any questions.

Managing Exchange Invites in Apple’s iCal

For better or worse, most of my coworkers live and die by their Exchange calendars. Unfortunately, as a developer working on a Mac 24/7, there aren’t many options for dealing with the barrage of Outlook invites I receive each day. I can either use Entourage which only kinda-sorta-works, or I can just deal with it and transcribe each invite manually into iCal or Google calendar. Both options are crap. (Groupcal from Snerdware used to be an option, but it doesn’t support Leopard and their developers appear to have stopped work on the product.) There’s no reason OS X shouldn’t be a full-fledged citizen in an Exchange environment. But, until that happens, here’s a collection of ever-improving AppleScript and PHP hacks I’ve written to make life in an Exchange world a little bit better

There are three problems we need to solve

- Viewing a user’s free/busy calendar

- Easily adding Exchange invites to iCal

- And accepting or declining those invites

My solution is a set of four Applescripts and a PHP script that (for me at least) make this nearly seamless. The bulk of the code is a PHP class called OWA which interacts with Outlook Web Access – a prerequisite for all of this working. Nearly all organizations which run Exchange have this enabled, so it shouldn’t be a problem for most people. The Applescripts are primarily just glue which pull information from Mail and pipe it into the PHP script for processing.

1. Viewing a User’s Free / Busy Schedule in iCal

I’ve placed the OWA PHP script in my Mac’s local web root. To subscribe to a user’s free / busy calendar I choose Subscribe from iCal’s Calendar menu and use the following URL:

http://localhost/owa.php?user=<username>

Where <username> is someone’s Exchange username. Behind the scenes, the PHP script logs into Outlook Web Access and scrapes the user’s calendar info and returns it as a properly formatted iCalendar file which iCal loads.

I’m the first to admit that this isn’t the most user-friendly solution, but it works. I stay subscribed to my manager and nearby coworkers’ schedules since they’re who I typically schedule meetings with. If I need to see someone more exotic, I just create a new calendar for them temporarily and delete it when I’m done.

2. Easily adding Exchange invites to iCal

To move Exchange invites from Mail into iCal, I use this AppleScript. Warning: I’m hardly an AppleScript expert, so suggestions are very much welcome.

tell application "Mail"

set theSelectedMessages to selection

repeat with theMessage in theSelectedMessages

set theAttachment to first item of theMessage's mail attachments

set theAttachmentFileName to "Macintosh HD:tmp:" & (theMessage's id as string) & ".ics"

save theAttachment in theAttachmentFileName

do shell script "fn='/tmp/$RANDOM.ics';cat " & quoted form of POSIX path of theAttachmentFileName & "| grep -v METHOD:REQUEST > $fn;open $fn; rm " & quoted form of POSIX path of theAttachmentFileName & "; exit 0;"

end repeat

end tell

In a nutshell, that grabs the first attachment from the currently selected Mail message (I blindly assume it’s an invite attachment), removes the line that prevents iCal from editing the event, and tells iCal to open it. At that point iCal takes over and asks you which calendar to add the event to.

It’s important to note that while the event is now stored in your local iCal calendar, it has not been marked as accepted or declined in your Exchange calendar. You need to specifically take that action using one of the next scripts.

3. Accepting or Declining Exchange Invites in Mail

To accept or decline an event (or even mark it as tentative) I’ve provided three scripts called “Accept Invite”, “Decline Invite”, etc. that pass the event to the OWA PHP script which then marks the event as such in your public calendar. Here’s the “Accept Invite” AppleScript. The other two are basically identical.

tell application “Mail” set theSelectedMessages to selection repeat with theMessage in theSelectedMessages set theSource to content of theMessage set theFileName to “Macintosh HD:tmp:” & (theMessage’s id as string) & “.msg” set theFile to open for access file theFileName with write permission write theSource to theFile starting at eof # close access theFile do shell script “curl http://localhost/owa.php?accept=” & POSIX path of theFileName end repeat end tell

Again, I make no claims about the quality of my AppleScript – I just know that this works well enough to get the job done.

Closing Notes

And there you have it. To sum up:

- Download my scripts

- Place owa.php into your local web root

- Place the four Applescripts into

~/Library/Scripts/Applications/Mail

After that, it’s up to you to call those AppleScripts as needed. I use Red Sweater Software’s Fast Scripts application to assign keyboard shortcuts to them. You could also use Quicksilver or whatever method works best for you.

Like I sad above, these scripts are constantly in flux as I revise and improve them. I’ll post any significant updates to this blog – and I hope you’ll email me with any questions you have or improvements you make.

Microsoft and Yahoo! – My Take

A favorite theory among tech pundits is that the company that will eventually dethrone Google doesn’t exist yet. Rather, it’ll be the brainchild of former Googlers who quit when their current job becomes boring or their stock options vest. Imagine a mass exodus – hundreds of very rich, very smart engineers with nothing but free time and creativity on their hands.

I like that idea. It has a certain romanticism to it. But what if the next, great tech giant doesn’t come from a Googler – but a Yahoo?

If (when) Microsoft buys Yahoo!, how many developers will stick around for what is sure to be a righteous clash of cultures? In his letter to Yahoo!, Steve Ballmer writes that Microsoft intends “to offer significant retention packages to [Yahoo!’s] engineers.” Forty-four billion dollars is certainly enough to buy the company. But does Microsoft have enough money to buy the loyalty of Yahoo!’s engineers? Many of whom are working at Yahoo! precisely because they don’t want to work for a company like Microsoft.

Could this be the deal that launches a thousand Silicon Valley startups? There’s no shortage of creativity brewing at Yahoo!. Attend any one of their internal Hack Days and you’ll find a wealth of amazing ideas and side-projects just waiting for the right opportunity. How many of those could turn into successful businesses if they were taken outside the company? I’d wager quite a few. And how many of those could turn into the Next Great Thing?

There’s bound to be at least one.

I have no doubt Steve Ballmer and the rest of Microsoft have examined every conceivable financial outcome of buying Yahoo!. I’m sure they’ve given great thought to how the two companies will combine their infrastructures. I’m sure there has been much talk of “synergy.” But as it’s become increasingly clear that Microsoft just doesn’t get Web 2.0 and the disruptive power a social network can wield, I wonder if they understand the implications of a thousand new startups — each working in parallel to finish the job that Google started.

Uncov Nails It

Uncov is good. But sometimes they’re great.

The proliferation of stupid is the cancer that is killing the internet. In the quest to re-implement every conceivable desktop application in Ajax, you mental midgets are setting computing back 10 years. The worst part about it is, you think that you’re innovating.